
If you are eligible to use the LASC, you must first register to obtain an account. After registration, you will be given a personal username and password.

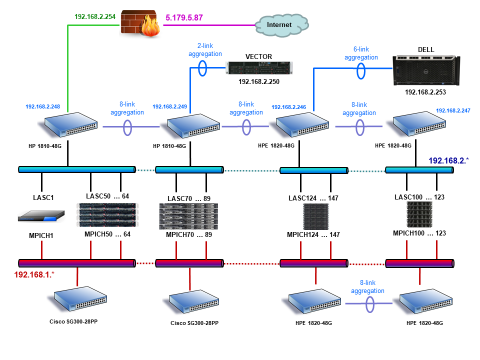

To access the cluster, use the LASC IP address: 5.179.5.87. Note that the cluster is accessible only via the SSH (Secure Shell) protocol, version 2. In a UNIX/Linux environment, you can connect to the cluster using the ssh command.
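As a minimal sketch of the login step, the helper below wraps the ssh call. The function name lasc_login and the variable LASC_USER are hypothetical and not part of the LASC setup; only the IP address comes from this page.

```shell
# Address of the cluster as given on this page.
LASC_HOST="5.179.5.87"

# Hypothetical helper: log in with the username passed as the first
# argument, falling back to $LASC_USER and then to the local user name.
lasc_login() {
    local user="${1:-${LASC_USER:-$USER}}"
    ssh "${user}@${LASC_HOST}"
}
```

Calling lasc_login myname is then equivalent to typing ssh myname@5.179.5.87, where myname stands for the username you received at registration.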

After logging in, you will be at a Red Hat Linux shell prompt. Users type commands at a shell prompt, the shell interprets these commands, and then the shell tells the operating system what to do. Experienced users can write shell scripts to expand their capabilities even more.

The default shell for Red Hat Linux is the Bourne Again Shell, or bash. You can learn more about bash by reading the bash man page (type man bash at a shell prompt). Several frequently used commands are described below:
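As an illustration, the short session below exercises a few standard commands. The selection is an assumption and not necessarily the set this page originally listed; the file and directory names are made up for the example.

```shell
# Work in a scratch directory so nothing in your home directory is touched.
cd "$(mktemp -d)"

pwd                       # print the current working directory
mkdir project             # create a directory
cd project                # change into it
echo "hello" > notes.txt  # create a small text file
ls -l                     # list files with sizes and permissions
cp notes.txt copy.txt     # copy a file
mv copy.txt old.txt       # rename (move) a file
cat old.txt               # print the contents of a file
rm old.txt                # delete a file
```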

Before running programs that use MPI, you must first set up your SSH environment so that you can connect to any cluster node without a password. This can be done by following these steps:
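One common way to do this, assuming the nodes run OpenSSH with public-key authentication enabled, is to generate a key pair without a passphrase and authorize its public half for your own account. Because /home is shared between the nodes, a key authorized once should then be valid on every node. The function name setup_cluster_keys is hypothetical, and this is a sketch rather than the site's official procedure.

```shell
# Hypothetical helper: create a passphrase-less RSA key (if none exists)
# and add its public half to authorized_keys in the given directory.
setup_cluster_keys() {
    local ssh_dir="${1:-$HOME/.ssh}"
    local key="$ssh_dir/id_rsa"

    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"

    # Generate the key pair only once; -N "" means an empty passphrase.
    [ -f "$key" ] || ssh-keygen -q -t rsa -N "" -f "$key"

    # Append the public key unless it is already authorized.
    local pub
    pub="$(cat "$key.pub")"
    grep -qxF "$pub" "$ssh_dir/authorized_keys" 2>/dev/null \
        || echo "$pub" >> "$ssh_dir/authorized_keys"
    chmod 600 "$ssh_dir/authorized_keys"
}
```

Run setup_cluster_keys once with no arguments to use ~/.ssh. Note that a key without a passphrase gives access to anyone who can read the private key file, so keep the permissions shown above.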

To run your application in interactive mode, simply type at a shell prompt

To start an application in background mode, type

If you need your application to keep running in the background after you log out of the system, type
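The three ways of running an application described above can be sketched as follows. The script myapp.sh is a hypothetical stand-in for your own program, and the log file names are arbitrary.

```shell
# Create a tiny stand-in application (hypothetical; use your own program).
cat > myapp.sh <<'EOF'
#!/bin/sh
sleep 1
echo "done"
EOF
chmod +x myapp.sh

# Interactive mode: the shell waits until the program finishes.
./myapp.sh

# Background mode: the trailing & returns the prompt immediately.
./myapp.sh > bg.log 2>&1 &

# Background mode that survives logout: nohup makes the program
# ignore the hangup signal sent when the session ends.
nohup ./myapp.sh > nohup.log 2>&1 &

# Only needed in this sketch, so that it ends after both jobs finish.
wait
```

Redirecting the output to a file is a design choice here: a background job has no terminal to print to after you log out, so its output would otherwise be lost or written to nohup.out.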

To measure the run time of your application, use the time command (see man time for details). For example, use the following command to run time-consuming applications from your home directory:

The time command reports three values: 1) the elapsed real time; 2) the total number of CPU-seconds that the process spent in user mode; 3) the total number of CPU-seconds that the process spent in kernel mode.
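A minimal example of the time command in bash, using sleep 1 as a stand-in for a real application:

```shell
# bash prints three lines to standard error after the command finishes:
#   real  elapsed wall-clock time
#   user  CPU time spent in user mode
#   sys   CPU time spent in kernel mode
time sleep 1
```

For a program that mostly waits, as sleep does, the real time is much larger than the user and sys times; for a compute-bound program on one CPU the real and user times are usually close.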

When you are logged into the cluster (see System Access above), you can access all cluster nodes via the Gigabit Ethernet network (192.168.2.*) using the RSH and SSH protocols. The names and IP addresses of the nodes are (all in lowercase!):

Here, gateway refers to the firewall that connects the cluster to the Internet. The gateway is "transparent" to users, which means that you cannot log on to the gateway itself. The node lasc1 is the one you are logged into first. To connect to other nodes, you must use the rsh (or ssh) command. For example, to connect to node lasc50, type rsh lasc50 (or ssh lasc50).

N.B. Always use the above lasc* names to log into the nodes: they are automatically recognized.
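The naming rule can also be enforced with a small wrapper. The function node_login below is a hypothetical sketch: it accepts only lowercase names of the form lasc followed by digits and passes them to ssh, without checking whether such a node actually exists.

```shell
# Hypothetical helper: connect to a compute node by its lasc* name.
node_login() {
    if [[ "$1" =~ ^lasc[0-9]+$ ]]; then
        ssh "$1"
    else
        echo "usage: node_login lascN (lowercase, e.g. lasc50)" >&2
        return 1
    fi
}
```

For example, node_login lasc50 runs ssh lasc50, while node_login LASC50 is rejected with a usage message.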

After login, you will have access to your /home directory.

The /home directory is exported to other nodes via 6-link aggregated Gigabit Ethernet channels using NFS.


These pages are maintained by Alexei Kuzmin (a.kuzmin@cfi.lu.lv). Comments and suggestions are welcome.
